6. Data Privacy-Preserving Techniques

April 15, 2022, lectured by Zümrüt MÜFTÜOĞLU, written by Dr. Merve Ayyüce KIZRAK

About Zümrüt MÜFTÜOĞLU

This week, we hosted Zümrüt Müftüoğlu, who works at the Digital Transformation Office of the Presidency of Türkiye and is an expert on data privacy. She talked to us about the balance between privacy and security and the technologies that increase privacy.

“Data is the pollution problem of the information age, and protecting privacy is the environmental challenge.” - Bruce Schneier

Data vs Information

For example, the number of likes on a social media post is a single element of data. When that is combined with other social media engagement statistics, such as followers, comments, and shares, a company can infer which social media platforms perform best and which ones it should focus on to engage its audience more effectively. That insight is information.

Data Classification

Carnegie Mellon University offers a proposal for sensitivity-based classification of data in the context of information security.

Types and Structures of Data

Location Data: the location history of a person.

Graph Data: data relating to a social network, communication network, or physical network.

Time Series: data that is updated over time, such as census information.

Privacy threats in data analytics

Surveillance: Many organizations, including retail and e-commerce companies, study their customers’ buying habits in order to come up with various offers and value-added services.

Disclosure: A third-party data analyst can link sensitive information to freely available external data sources such as census data, and thereby re-identify individuals.

Discrimination: The bias or inequality that can arise when some private information about a person is disclosed.

Personal embarrassment and abuse: Whenever some private information about a person is disclosed, it can lead to personal embarrassment or even abuse.
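The disclosure threat listed above can be illustrated with a small linkage sketch. It assumes a dataset that has had names removed but still contains quasi-identifiers, and a public list that contains the same quasi-identifiers together with names. The field names (`zip`, `birth_year`, `sex`, `diagnosis`) and all records are hypothetical toy values chosen only for illustration.

```python
def link_records(anonymized, public, keys=("zip", "birth_year", "sex")):
    """Re-identify rows whose quasi-identifiers match exactly one public record."""
    # Index the public list by the quasi-identifier combination.
    index = {}
    for person in public:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])

    matches = []
    for row in anonymized:
        names = index.get(tuple(row[k] for k in keys), [])
        if len(names) == 1:  # a unique match reveals who the row belongs to
            matches.append((names[0], row["diagnosis"]))
    return matches


# Hypothetical "anonymized" health rows (names removed).
health_rows = [
    {"zip": "10001", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"zip": "10002", "birth_year": 1975, "sex": "M", "diagnosis": "diabetes"},
]
# Hypothetical public list (for example, a census-like register).
public_list = [
    {"name": "Person A", "zip": "10001", "birth_year": 1980, "sex": "F"},
    {"name": "Person B", "zip": "10002", "birth_year": 1975, "sex": "M"},
    {"name": "Person C", "zip": "10002", "birth_year": 1975, "sex": "M"},
]

print(link_records(health_rows, public_list))
```

In this toy data only the first row is re-identified, because the second row matches two people in the public list. This is why reducing the uniqueness of quasi-identifier combinations lowers disclosure risk.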

Governments and regulatory agencies can be regarded as the institutions with the most responsibility on this subject. Because governments can enforce privacy regulations, they can ensure that data stakeholders comply with them. Through careless use of social media applications such as Facebook and Instagram, users also upload personal data to the public domain, which leads to privacy threats. As privacy threats and their consequences have grown, awareness among users has increased, and with it the demand for privacy protection. In response, countries began to create privacy laws and regulations. The most prominent among these are the European Union’s GDPR (General Data Protection Regulation) and India’s Personal Data Protection law. Some of the practices are shown in the table below, along with the privacy risk.

Security vs Privacy

Security is about protecting data. It means protection against unauthorized access to data. We implement security controls to limit who can access information.

Privacy is about protecting user identity, although it is sometimes difficult to define.

However, we also encounter areas where the two concepts overlap.

Let’s try to clarify the difference with an example. A company you shop with can access much of your personal data. Precautions such as choosing secure systems and software to store that data safely against third parties, and to process it if you have given permission, fall under the heading of security. However, the company also has employees, such as cashiers. Determining the conditions under which these personnel can access the data falls under the heading of privacy.

A study examined the security and privacy practices of more than 300 HIV outpatient clinics in Vietnam. The study concluded that “most staff have appropriate safeguards and practices in place to ensure data security; however, improvements are still needed, particularly in protecting patient privacy for data access, sharing and transmission.”*

Privacy Failures in History

Massachusetts Health Records (1990s)

AOL Search Logs (2006)

Netflix Prize (2007)

Facebook Ads (2010)

New York City Taxi Trips (2014)

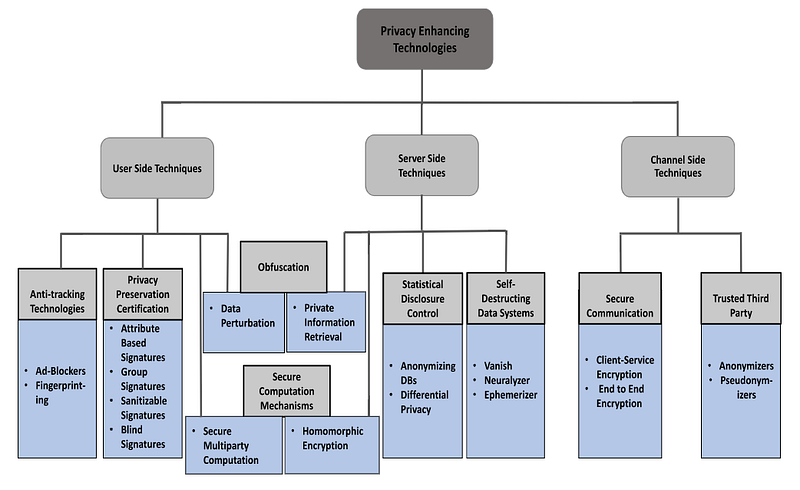

Privacy-Enhancing Technologies (PETs)

Differential Privacy

Differential privacy is designed to share general patterns about the group(s) covered by a dataset while withholding information about the individuals in it. It is not a single algorithm but a system or framework that provides more effective data privacy. The approach can be described simply as follows. Each individual in a group has some amount of money in their pocket, and random noise in the range of -100 to +100 is added to each amount. We do not want to know who has how much. We want to know what the group has in its pockets collectively. For example, one person in the group has $55 in their pocket and is assigned a noise of -15. We then observe 55 + (-15) = $40, which protects that person's privacy. Two people with the same amount but different random noise report values that have no regular relationship with each other. The noise can be added to the input or to the output of the system.

What makes this work is the law of large numbers from probability and statistics. According to this law, the larger the sample, the closer its mean is to the mean of the entire population. In other words, if there are enough individuals in the dataset, the zero-mean noise cancels out when the reported values are averaged, and the resulting average is close to the real average. Each reported value is the original value plus a random number. Thus, we learn the average amount in an individual's pocket, but we do not know the amount in any one individual’s pocket. This is how privacy is provided. The same reasoning applies to cancer patients and healthy patients, as shown in the figure, instead of the amount of money in a pocket. Those who want to dive deeper into the subject should check the reference of the figure.
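The pocket-money intuition above can be tried out with a minimal sketch. It assumes each person adds uniform noise in the range -100 to +100 to their own amount before reporting it, as in the lecture's example. The function name `noisy_mean` and the simulated amounts are hypothetical. This illustrates the averaging effect only. It is not a formally calibrated differential privacy mechanism, which would typically use noise such as Laplace or Gaussian scaled to a privacy budget.

```python
import random


def noisy_mean(values, noise_range=100.0, rng=random):
    """Add uniform noise in [-noise_range, +noise_range] to every value,
    then return the mean of the noisy reports and the reports themselves."""
    reports = [v + rng.uniform(-noise_range, noise_range) for v in values]
    return sum(reports) / len(reports), reports


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    for size in (10, 1_000, 100_000):
        # Simulated pocket amounts between $0 and $100 (illustrative only).
        pockets = [rng.uniform(0, 100) for _ in range(size)]
        estimate, _ = noisy_mean(pockets, rng=rng)
        true_mean = sum(pockets) / size
        print(f"n={size:>6}  true mean={true_mean:7.2f}  noisy mean={estimate:7.2f}")
```

With only a few people the noisy mean can be far from the true mean. As the group grows, the gap shrinks, while each single report still reveals little about the person behind it.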

Source: Extra explanation by Nicolas Papernot and Ian Goodfellow according to student’s questions: Privacy and machine learning: two unexpected allies?

Homomorphic Encryption

Homomorphic encryption is another method of providing input privacy for AI. It is a type of encryption that allows computation on encrypted data. In this setting it addresses privacy concerns not only for data owners but also for model owners, whose models are valuable intellectual property. Therefore, if the model is to be used in an untrusted environment, it is preferable to keep its parameters encrypted.

Advantages:

Inference can run on encrypted data, so the model owner never sees the customer’s private data and therefore cannot leak or misuse it.

It does not require interaction between data and model owners to perform the calculation.

Disadvantages:

It requires high computing power.

It is limited to certain types of calculations.
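To make "computation on encrypted data" concrete, here is a toy sketch of the Paillier cryptosystem, one well-known additively homomorphic scheme. The lecture does not name a specific scheme, so this choice is an assumption. The primes 1009 and 1013 are far too small to be secure, and the function names are hypothetical. Python 3.8 or later is assumed for the modular inverse via `pow`.

```python
import math
import secrets


def generate_keys(p=1009, q=1013):
    """Build a toy Paillier key pair from two small primes (insecure sizes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # decryption constant for the generator g = n + 1
    return n, (lam, mu)   # public key n, private key (lam, mu)


def encrypt(n, m):
    """Encrypt an integer m with 0 <= m < n under the public key n."""
    n_sq = n * n
    while True:  # pick random r that is invertible modulo n
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(n, private_key, c):
    """Recover the plaintext from ciphertext c."""
    lam, mu = private_key
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)


def scale_encrypted(n, c, k):
    """Raising a ciphertext to the power k multiplies the plaintext by k."""
    return pow(c, k, n * n)


n, private_key = generate_keys()
total = add_encrypted(n, encrypt(n, 55), encrypt(n, 40))
print(decrypt(n, private_key, total))                               # 95
print(decrypt(n, private_key, scale_encrypted(n, encrypt(n, 7), 6)))  # 42
```

The party that computes `total` never sees 55 or 40. The sketch also reflects the second disadvantage listed above: this scheme supports only addition of ciphertexts and multiplication by a known constant, not arbitrary computation.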

Source: Here’s a link to Andrew Trask’s article on privacy-preserving security if you want to dive deeper.

Federated Learning

Sharing and processing the huge amount of data needed for AI often poses privacy and security risks. Another method to overcome these challenges is Federated Learning.

Federated Learning is an approach to bringing code to data rather than bringing data to code. Thus, it addresses issues such as data privacy, ownership, and locality.

Certain techniques are used to compress model updates.

It computes higher-quality updates instead of simple gradient steps.

The server adds noise before performing the aggregation to hide the influence of any individual on the learned model.

If gradient updates are too large, they are clipped.
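The points above (local updates that are richer than a single gradient step, clipping, and server-side noise before aggregation) can be sketched as one training round. This is a minimal illustration in the spirit of federated averaging, assuming a linear model, plain SGD on each client, and Gaussian noise. The function names, the learning rate, the clipping bound, and the noise level are all hypothetical, and update compression is omitted.

```python
import math
import random


def local_update(weights, data, lr=0.05, epochs=5):
    """Run several SGD epochs on one client's data and return the weight delta.
    Only this delta leaves the device, never the raw data."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return [wi - w0 for wi, w0 in zip(w, weights)]


def clip_update(delta, max_norm):
    """Scale the update down if its L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(d * d for d in delta))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [d * scale for d in delta]


def federated_round(weights, clients, max_norm=1.0, noise_std=0.01, rng=random):
    """One round: collect client deltas, clip them, add noise, then average."""
    noisy_deltas = []
    for data in clients:
        delta = clip_update(local_update(weights, data), max_norm)
        # Server-side noise added before aggregation, as described in the text.
        noisy_deltas.append([d + rng.gauss(0.0, noise_std) for d in delta])
    average = [sum(col) / len(noisy_deltas) for col in zip(*noisy_deltas)]
    return [w + a for w, a in zip(weights, average)]


if __name__ == "__main__":
    rng = random.Random(0)
    true_w = [2.0, -1.0]  # hypothetical target model for the simulation
    clients = []
    for _ in range(5):
        xs = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(20)]
        clients.append([(x, true_w[0] * x[0] + true_w[1] * x[1]) for x in xs])
    w = [0.0, 0.0]
    for _ in range(30):
        w = federated_round(w, clients, rng=rng)
    print([round(v, 2) for v in w])
```

Clipping bounds how much any single client can move the shared model, and the noise masks what remains of an individual contribution. Both come at some cost in accuracy, which is the trade-off the lecture emphasizes.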

Federated learning differs from decentralized computing:

Client devices such as smartphones have limited network bandwidth.

Their ability to transfer large amounts of data is poor. Usually, the upload speed is lower than the download speed.

Client devices may not always be in a suitable state to participate in training. Conditions such as internet connection quality and charging status must be suitable.

The data available on the device is updated quickly and is not always the same.

For client devices, not participating in the training is also an option.

The number of client devices available is huge but inconsistent.

Federated learning provides distributed training and aggregation across a large population, along with privacy protection.

Data is often unbalanced, as it is user-specific and auto-correlated.

Zümrüt Müftüoğlu, who was the guest in our lecture, emphasized that there is a trade-off between privacy and data sharing. Next week we will discuss legal cases and approaches to IoT applications in our lecture.

References:
