3. Concepts of AI Ethics

March 25, 2022. Lectured by Dr. Cansu CANCA and written by Dr. Merve Ayyüce KIZRAK

About Dr. Cansu CANCA

In this week’s lecture, philosopher and AI ethicist Dr. Cansu Canca was the guest lecturer. It was a thought-provoking and instructive lecture with questions, problems, and discussions.

“Sometimes respecting people means making sure your systems are inclusive such as in the case of using AI for precision medicine, at times it means respecting people’s privacy by not collecting any data, and it always means respecting the dignity of an individual.” — Joy Buolamwini

“Ethics is the branch of philosophy that systematically analyzes and determines what is right and wrong. Defining the value judgments we assume and analyzing whether they are appropriate for a given case fall within the field of applied ethics. The value judgments in the use cases of AI that we talk about raise the question of whether these technologies are used ethically/correctly. For example, given that our access to information is now almost exclusively through platforms such as Google, Facebook, and Twitter, the value judgments in the algorithms of these platforms end up largely shaping our world view and thus, our world. Similarly, not showing high-income job opportunities to a certain segment of the society causes this segment to be deprived of certain job opportunities (without even being aware of it), and thus leads to injustice. These are just some of the ethical questions/problems posed by the widespread use of AI.” *

Applying Ethics to AI Technologies

In the online class, we first explored ethics through the example of search engines, though many other examples would serve equally well.

How do the AI apps/systems work and where is the ethics component?

In our discussion of search engines, we considered several examples, one of which was the coronavirus vaccine. For example, I might want to search for places near my neighborhood where I can receive a coronavirus vaccine, but the search results might include (or even prioritize) other coronavirus-related information, such as conspiracy theories or lists of celebrities who are vaccinated. Prioritizing false or non-essential information can have dangerous and harmful effects on individuals and on society.

This brings us to the question of “relevance” — how do we code relevance and what value judgments are made in interpreting relevance? In our discussion over many different search examples, the following topics emerged.

We discussed the difference between looking for information we already know, searching for information we never knew, and being satisfied with the search results. Although search engine results are often associated with social media, we concluded that there must be a balance between keeping the user happy and satisfied and making sure the results do not harm the world.
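To make the question of how relevance gets coded more concrete, here is a minimal sketch in Python. It is not how any real search engine works: the `Result` fields, the scores, and the weights are all hypothetical values invented for illustration. It only shows that whoever picks the weights is making a value judgment about what counts as "relevant".

```python
from dataclasses import dataclass


@dataclass
class Result:
    title: str
    topical_match: float  # hypothetical: how well the page matches the query, 0..1
    engagement: float     # hypothetical: predicted clicks/attention, 0..1
    reliability: float    # hypothetical: estimated source reliability, 0..1


def rank(results, w_match, w_engagement, w_reliability):
    """Order result titles by a weighted sum; the weights encode the value judgment."""
    def score(r):
        return (w_match * r.topical_match
                + w_engagement * r.engagement
                + w_reliability * r.reliability)
    return [r.title for r in sorted(results, key=score, reverse=True)]


# Made-up results for a query about coronavirus vaccination.
results = [
    Result("Vaccination sites near you", 0.9, 0.3, 0.9),
    Result("Celebrities who got vaccinated", 0.6, 0.9, 0.5),
    Result("Vaccine conspiracy theory", 0.6, 0.8, 0.1),
]

# Same results, two different ideas of "relevance".
engagement_first = rank(results, w_match=0.2, w_engagement=0.7, w_reliability=0.1)
reliability_first = rank(results, w_match=0.5, w_engagement=0.1, w_reliability=0.4)

print(engagement_first)
print(reliability_first)
```

With the engagement-heavy weights, the celebrity story comes first and the vaccination sites come last; with the reliability-heavy weights, the order flips and the vaccination sites lead. Nothing about the pages changed, only the judgment about what matters.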

Another key term in ethics is “autonomy”. The basic idea here is that as rational beings, we can and should be allowed to make our own decisions and have self-governance. Every individual’s autonomy must be respected, which means that manipulating people or forcing them is ethically wrong. But I don’t need to use force to violate your autonomy. I can also restrict and shape the information you have. By changing the information you have access to, I can impact your decision-making and manipulate your behavior. Search engines, being platforms that shape and present information, have the capacity to impact individual autonomy. And in creating these platforms and the AI systems within them, questions related to harm, autonomy, and fairness are unavoidable.

A related topic we discussed was the consequences of bias in data and/or AI models across many different applications.

If you want to see examples of what bias is and how we can come across it, you can review this article that I have published before:

The bias in the AI apps/systems we’re talking about can appear unintentionally across races, genders, social statuses, and so on, and can have a growing effect when unnoticed. So we need to answer some questions.
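One simple way to notice this kind of bias is to compare how often each group receives a given outcome. The sketch below is an illustration only: the group labels, the records, and the function names `exposure_rates` and `exposure_gap` are hypothetical, and a real audit would need far more care (sample sizes, confounders, and the choice of fairness metric itself). It uses the job-advertisement example from the quote above.

```python
from collections import defaultdict


def exposure_rates(records):
    """Return, for each group, the share of users who were shown the ad."""
    shown = defaultdict(int)
    total = defaultdict(int)
    for group, was_shown in records:
        total[group] += 1
        shown[group] += int(was_shown)
    return {group: shown[group] / total[group] for group in total}


def exposure_gap(rates):
    """Difference between the most-exposed and least-exposed group."""
    return max(rates.values()) - min(rates.values())


# Made-up log: (group, whether a high-income job ad was shown).
records = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = exposure_rates(records)
gap = exposure_gap(rates)

print(rates)  # group A sees the ad far more often than group B
print(gap)    # a large gap is a signal to investigate, not a verdict
```

A gap like this does not by itself prove unfairness, but it makes an otherwise invisible pattern visible, which matters because the affected users may never know what they were not shown.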

What is Ethics and what is AI ethics?

The core questions of ethics are: What is the right/good thing to do? What is the right/fair system to implement?

The scope of AI ethics can be presented as the following:

Content/product: Developing ethical technologies

Process: Ensuring ethical processes for tech development

Implementation: Using and implementing tech ethically

AI ethics principles

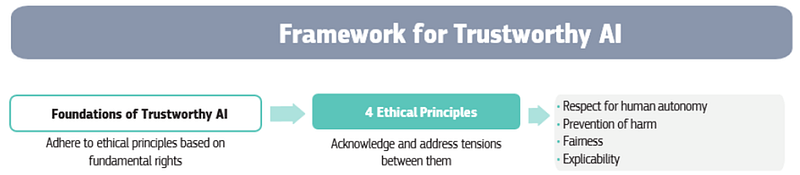

Many sets of AI principles have been published around the world, and one of the best documents came from the European Commission. Its framework is built on four basic ethical principles: respect for human autonomy, prevention of harm, fairness (justice), and explicability. The first three correspond to the traditional principles of bioethics (beneficence and non-maleficence are often merged into a single principle of prevention of harm). Explicability is an added principle that is meant to enable the other principles in the context of AI.

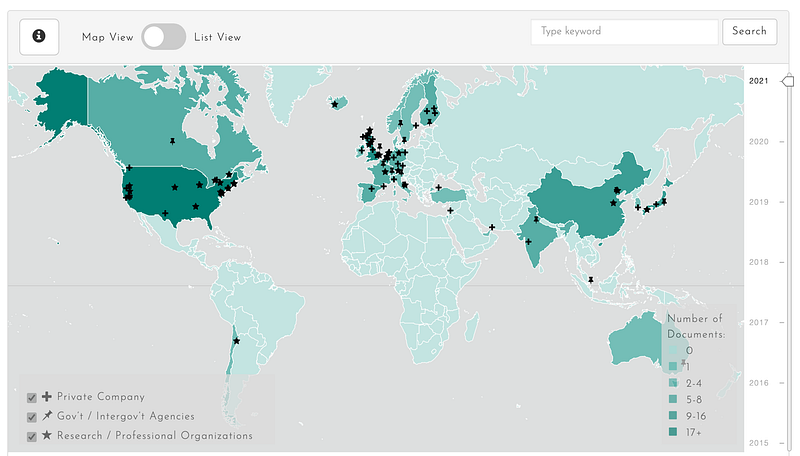

The Box, the tool shown in the image, was developed by AI Ethics Lab, where Cansu Canca is the founder and director, and is one of the Lab’s major projects. It is part of a toolkit (Dynamics of AI Principles) that systematizes and analyzes all AI principles published around the world. By now, most companies and countries have put forth their AI principles. The Dynamics toolkit helps us understand how these principles develop over time and across geographies, and how they compare with each other. The Box goes one step further and puts these principles in a conceptually coherent and practically applicable format. The goal of this tool is to help practitioners and researchers ask each of the questions associated with the principles and evaluate how their work rates ethically.

We must aim to create inclusive, equitable policy and technological innovation that secures the benefits of AI while balancing benefit against harm, and that reduces the risks of AI for all people and the world we share. To that end, next week we will discuss, one by one, how ethical principles can be applied technically.
