10. Human Rights and AI

May 13, 2022. Lectured by Dr. Eren SÖZÜER; written by Dr. Merve Ayyüce KIZRAK

About Dr. Eren SÖZÜER

For this lecture on human rights and AI, we hosted legal expert Dr. Eren Sözüer, an academic at Istanbul University.

"A right delayed is a right denied." —Martin Luther King

We don't yet know exactly what AI will mean for the future of society, but we can act now to build the tools that we need to protect people from its most dangerous applications.

What are Human Rights?

As usual, we started this week's lecture with a brainstorming session. We asked the students what comes to mind when they think of human rights; their answers were as follows:

Rules and qualifications that help improve the quality of life and spread justice and equality all over the world.

Principles and standards that ensure people are treated fairly and morally.

It is like an umbrella covering some standards and regulations to protect people.

Moral principles or norms for human behavior, often protected in international law.

It is a set of rights that every person has from the moment of his/her birth.

It can also mean how people want to be treated.

They are the rules of living respectfully with each other.

Human rights are rights that are mandatory for all human beings, regardless of race, gender, nationality, ethnicity, language, religion, or any other status.

These are rights that must be protected by law and ethically.

It means treating people with fairness, respect, equality, and dignity, and giving them a say in their own lives and decisions.

The answers also mentioned the right to life, the prohibition of torture, and the rights to privacy, nutrition, education, and work. All of these statements are true. Human rights express what should be, rather than what is.

Human rights are:

Universal,

Inalienable,

Indivisible and interdependent.

How Does AI Affect Human Rights?

For most of society, the impact of AI systems on humans remains uncertain. Alongside hopes that AI can bring "global goodness," there is evidence that some AI systems are already violating fundamental rights and freedoms.

So, can stakeholders in the AI ecosystem use international human rights as a "North Star" to guide the development of AI technologies?

International human rights may help to identify, prevent, and remedy significant risks and sources of harm.

A human rights-based framework can provide normative and legal guidance for protecting human dignity and values, regardless of a country's national legal order. The idea can be summarized as:

“In order for AI to benefit the common good, at the very least its design and deployment should avoid harms to fundamental human values. International human rights provide a robust and global formulation of those values.”*

Generally, the field of AI is evaluated against five pillars of human rights:

Non-discrimination,

Equality,

Participation,

Privacy,

Freedom of expression.

AI applications have been involved in a number of recent controversies. Despite some instances of harm to rights, some positive progress has already been made. Stakeholder engagement is important when we consider AI and human rights together. Let's take a look at a few examples from the recent past.

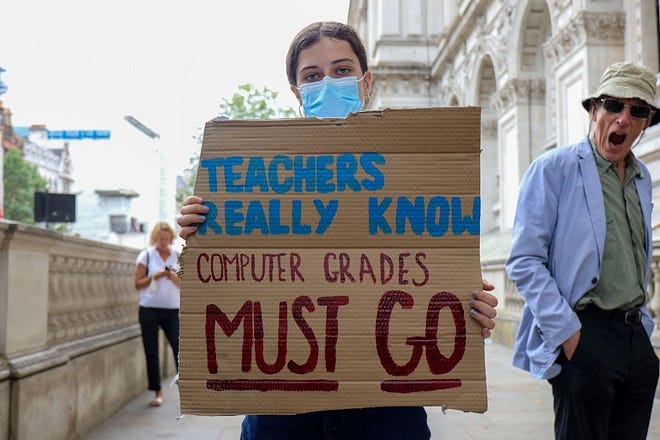

United Kingdom’s university qualification algorithm

The UK government used a computer-generated grading system to replace exams that were canceled during the pandemic, when schools were closed. A large number of students saw their grades lowered, and many of them had their university admissions canceled. This sparked debate over whether it is right to rely on algorithms for decisions that directly affect the public and their future. British youth drew attention to the issue with protests.

“Experts said the grading scandal was a sign of debates to come as Britain and other countries increasingly use technology to automate public services, arguing that it can make government more efficient and remove human prejudices.” — The New York Times

ARTICLE 19: "Ban the design, development, sale, and use of emotion recognition technologies with immediate effect."

Dutch welfare fraud detection system “SyRI”

AI technologies, which governments increasingly use, have also sparked debate. In the Netherlands, SyRI, a system for detecting welfare fraud, was halted immediately by court order, and the ruling resonated beyond the Netherlands. Unfortunately, AI applications of this type can be developed without the participation of all stakeholders, target poor people, violate privacy and human rights norms, and unfairly punish the most vulnerable.

“The system did not pass the test required by the European convention on human rights of a “fair balance” between its objectives, namely to prevent and combat fraud in the interest of economic wellbeing, and the violation of privacy that its use entailed, the court added, declaring the legislation was therefore unlawful. The Dutch government can appeal against the decision.”— The Guardian

It is also important to be aware that human rights cannot address all current and unforeseen concerns about AI. Recent work in this area should focus on how a human rights approach can be implemented through policy, practice, and organizational change.

The Governing AI report by Data & Society offers some initial human rights recommendations:

Technology companies should seek effective communication channels with local civil society groups and researchers, particularly in geographic areas where human rights concerns are high, to identify and respond to risks associated with AI deployments.

Technology companies and researchers should conduct impact assessments on human rights throughout the lifecycle of AI systems. This impact assessment approach should be updated by researchers in line with the developments in AI technology. Tools should be developed so that assessments can be made easily.

States should adopt global human rights obligations in their national AI policies. They should be involved in international studies. Because human rights principles are not written as technical specifications, human rights lawyers, policymakers, social scientists, computer scientists, and engineers must work together to make human rights functional in business models, workflows, and product design.

Academics should be encouraged to conduct and publish further research on the subject. Human rights and legal scholars must work with other stakeholders to balance rights when faced with certain AI risks and harms.

United Nations human rights researchers and special rapporteurs must continue to investigate and publicize the human rights impacts that result from AI systems.

As AI applications become part of our daily lives, their impact on human rights grows in a way that sets them apart from most other technologies. This impact usually takes the form of interference with rights. The focus is therefore on discussing potential risks and developing solutions while the technology is still in the development phase. One of the organizations doing this is Access Now. It is necessary to start examining now what safeguards and structures are needed to address problems and abuses, alongside the benefits AI technology can bring to humans. The worst harms, including those that disproportionately affect marginalized people, can be prevented and mitigated. In addition, solutions grounded in human rights law and recommendations can help establish a framework. Suggestions generally fall into four categories:

Developing data protection rules to protect rights in datasets used to develop and feed AI systems;

Creating specific safeguards for government uses of AI;

Taking security measures for private sector use of AI systems;

Investing in more research to continue examining the future of AI and its possible interferences with human rights.

Depth in rights

Types of rights

The impact is multidimensional

Rights of vulnerable groups: children, women, persons with special needs, racial minorities

What can human rights law help us achieve?

Certainty and uniformity

Stability

Accountability

Enforcement and redress

Some takeaways

Ethics as a first step

Ubiquitous and ongoing ratification

Bias and discrimination

Lack of transparency and accountability

Techno-optimism

Digital Rights Organizations (non-exhaustive list)

References:
